Lessons Learned from Developing an AI Chatbot on AWS

AI Chatbot

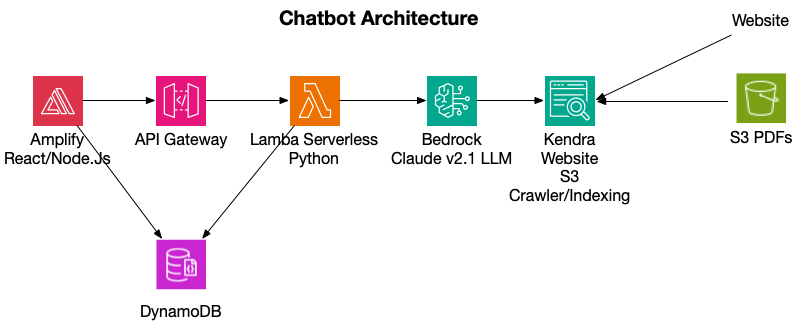

With AI everywhere, I wanted to learn more about how AI works, including large language models (LLMs) and prompt engineering. I wanted to go beyond just using OpenAI’s ChatGPT, so I started by building a basic AI chatbot that answers questions based on data sources such as websites, Amazon S3, and other external sources.

Where to begin?

To build the chatbot, I started by crawling and indexing the website whose content I wanted the model to draw on. I could have written a Python Lambda function to crawl the website and store the index in a vector store for the LLM. Instead, I used an existing AWS service, Amazon Kendra, to crawl and index the website. Kendra is a good fit because modules and libraries are available for using it with Bedrock. Kendra also offers other connectors, such as S3 for PDFs and other documents, Confluence, and databases such as Amazon RDS. All of this is possible without Kendra, even at a lower cost, but using the service speeds up development and simplifies ongoing support. The real value of writing your own code lies in frontend development and analytics collection.
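The sketch below shows how the backend might pull relevant passages from a Kendra index before calling the model. It uses the Kendra Retrieve API through a boto3 `kendra` client. The client is passed in as a parameter so the logic can be exercised without AWS. The index ID, the `max_results` cutoff, and the context format are illustrative assumptions, not the author’s code.

```python
from typing import Any, Dict, List

# Hypothetical placeholder: replace with the ID of your own Kendra index.
KENDRA_INDEX_ID = "your-kendra-index-id"


def retrieve_passages(kendra_client: Any, question: str,
                      index_id: str = KENDRA_INDEX_ID,
                      max_results: int = 5) -> List[Dict[str, str]]:
    """Query a Kendra index with the Retrieve API and return passage text plus its source."""
    response = kendra_client.retrieve(IndexId=index_id, QueryText=question)
    passages = []
    # Keep only the top results to limit how much text goes into the prompt.
    for item in response.get("ResultItems", [])[:max_results]:
        passages.append({
            "text": item.get("Content", ""),
            "title": item.get("DocumentTitle", ""),
            "source": item.get("DocumentURI", ""),
        })
    return passages


def build_context(passages: List[Dict[str, str]]) -> str:
    """Join the retrieved passages into one numbered context block for the LLM prompt."""
    return "\n\n".join(
        f"[{i + 1}] {p['title']}\n{p['text']}" for i, p in enumerate(passages)
    )
```

In a Lambda function you would pass `boto3.client("kendra")` as `kendra_client`. The `source` values can then be returned alongside the answer.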

One LLM to rule them all

Next comes the LLM, which takes the content retrieved from the index and produces a response. I appreciate AWS’s decision not to tie itself to a single vendor such as OpenAI, as Microsoft has done. Models will keep improving in performance, accuracy, and cost over time, and Bedrock gives you several to choose from, such as Titan, Llama, and Claude. I started with Claude v2.1 but needed to switch to Claude Instant. Because Bedrock supports multiple models, the switch took a change to a single line of code. A list of models is available at https://aws.amazon.com/bedrock/.
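To illustrate the one-line model switch, here is a minimal sketch that calls Bedrock’s `invoke_model` with the model ID held in a single constant. It assumes the Claude Text Completions request format (a `Human:`/`Assistant:` prompt with `max_tokens_to_sample`), which Claude v2.1 and Claude Instant accept. Other model families such as Titan or Llama expect different request bodies. The prompt wording and token limit are illustrative choices.

```python
import json
from typing import Any

# Switching models is a one-line change. Check the Bedrock console for the
# model IDs enabled in your account and Region.
# MODEL_ID = "anthropic.claude-v2:1"
MODEL_ID = "anthropic.claude-instant-v1"


def build_claude_body(question: str, context: str, max_tokens: int = 500) -> str:
    """Build a request body in the Claude Text Completions format (Human/Assistant turns)."""
    prompt = (
        "\n\nHuman: Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
        "\n\nAssistant:"
    )
    return json.dumps({"prompt": prompt, "max_tokens_to_sample": max_tokens})


def ask_model(bedrock_runtime: Any, question: str, context: str,
              model_id: str = MODEL_ID) -> str:
    """Send the prompt to Bedrock and return the generated completion text."""
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        body=build_claude_body(question, context),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and decode the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["completion"]
```

Here `bedrock_runtime` would be `boto3.client("bedrock-runtime")`. Keeping the model ID in one place is what turns a model swap into a single-line edit, as long as the new model uses the same request format.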

Building the Bedrock Component

I’m most familiar with Python and wanted to separate frontend and backend development, so I built the backend as an API using a Python Lambda function. The function takes the submitted question, sends it to the model, and returns the model’s response in JSON format. The response includes the answer, the sources, and an HTTP 200 (OK) status for the API.
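A Lambda handler behind an API Gateway proxy integration could look roughly like the sketch below. It returns `statusCode`, `headers`, and a JSON `body` containing the answer and sources. The `question` request field, the `answer`/`sources` response fields, and the `answer_question` helper are all hypothetical. The helper is a stub standing in for the Kendra and Bedrock calls.

```python
import json
from typing import Any, Dict, List, Tuple


def answer_question(question: str) -> Tuple[str, List[str]]:
    """Hypothetical stub: the real function would query Kendra and then Bedrock."""
    return f"(answer to: {question})", ["https://example.com/source-page"]


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Handle an API Gateway proxy request and return the answer and sources as JSON."""
    headers = {
        "Content-Type": "application/json",
        # Lets a browser frontend on another domain call the API; narrow this in production.
        "Access-Control-Allow-Origin": "*",
    }
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        payload = {}
    question = str(payload.get("question", "")).strip() if isinstance(payload, dict) else ""
    if not question:
        return {"statusCode": 400, "headers": headers,
                "body": json.dumps({"error": "Missing 'question' in request body"})}

    answer, sources = answer_question(question)
    return {
        "statusCode": 200,  # the "OK" status passed back through API Gateway
        "headers": headers,
        "body": json.dumps({"answer": answer, "sources": sources}),
    }
```

Rejecting an empty or malformed body with a 400 keeps bad requests from ever reaching the model, which would otherwise cost both time and tokens.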

I used Amazon API Gateway to create the interface between the frontend and the Lambda function. Because the API returns JSON, the frontend is interchangeable: any client that can send an HTTP request and parse JSON can use it.
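To show that any client can consume the JSON API, here is a minimal Python client built on the standard library’s `urllib.request`. The endpoint URL is a hypothetical placeholder for the invoke URL API Gateway shows after deployment. The `question` request field mirrors the assumption made for the backend.

```python
import json
import urllib.request
from typing import Any, Dict

# Hypothetical endpoint: use the invoke URL from your API Gateway stage.
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/chat"


def build_request(question: str, url: str = API_URL) -> urllib.request.Request:
    """Build a POST request that carries the question as a JSON body."""
    data = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(url, data=data, method="POST",
                                  headers={"Content-Type": "application/json"})


def parse_response(raw: bytes) -> Dict[str, Any]:
    """Decode the JSON body returned by the API (for example, answer and sources)."""
    return json.loads(raw.decode("utf-8"))


def ask(question: str, url: str = API_URL) -> Dict[str, Any]:
    """Send the question over HTTPS and return the parsed JSON response."""
    with urllib.request.urlopen(build_request(question, url), timeout=30) as resp:
        return parse_response(resp.read())
```

A React frontend does the same thing with `fetch`. The contract between the two sides is only the JSON shape, so either client can be replaced without touching the backend.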

A second Lambda function stores the prompt, response, session, and IP address in an Amazon DynamoDB table for analytics. This data helps spot repeated questions, track and evaluate the quality of responses, and perform other analytics.
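The analytics record could be written with `put_item` on a boto3 DynamoDB `Table` resource, as in the sketch below. The attribute names and the UUID partition key `interactionId` are hypothetical. The source IP is read from `requestContext.identity.sourceIp`, which is where a REST API proxy event carries it. HTTP APIs use a different event layout.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Dict


def source_ip(event: Dict[str, Any]) -> str:
    """Read the caller's IP from an API Gateway REST API proxy event."""
    return event.get("requestContext", {}).get("identity", {}).get("sourceIp", "unknown")


def build_item(prompt: str, response: str, session_id: str, ip: str) -> Dict[str, str]:
    """Build one analytics record; all attribute names here are hypothetical."""
    return {
        "interactionId": str(uuid.uuid4()),  # hypothetical partition key
        "sessionId": session_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "response": response,
        "sourceIp": ip,
    }


def log_interaction(table: Any, prompt: str, response: str,
                    session_id: str, ip: str) -> Dict[str, str]:
    """Write the record with put_item and return it (e.g. so the ID can go to the client)."""
    item = build_item(prompt, response, session_id, ip)
    table.put_item(Item=item)
    return item
```

In Lambda, `table` would be something like `boto3.resource("dynamodb").Table("ChatbotInteractions")`, where the table name is also hypothetical. Returning the generated `interactionId` to the frontend gives later feedback something to attach to.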

Frontend Development

For the frontend chatbot interface, I used AWS Amplify with Node.js and React. Rather than developing an interface from scratch, I used the React Chatbot Kit framework to create the app. Since I’m not a user interface developer, the framework let me focus on integrating the frontend with Amazon API Gateway. You will also want to capture user feedback on the model’s responses. I used a simple thumbs up and thumbs down, with the data sent to a DynamoDB table.
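A backend endpoint for the thumbs up/down button might attach the rating to the stored interaction with DynamoDB’s `update_item`, as sketched below. It assumes the hypothetical `interactionId` key from the analytics table and accepts only `"up"` or `"down"`. The condition expression stops a stray ID from silently creating a new item.

```python
from typing import Any, Dict

VALID_FEEDBACK = {"up", "down"}


def record_feedback(table: Any, interaction_id: str, feedback: str) -> Dict[str, Any]:
    """Attach a thumbs up/down rating to an existing interaction record.

    Assumes a hypothetical table whose partition key is 'interactionId'.
    """
    if feedback not in VALID_FEEDBACK:
        raise ValueError(f"feedback must be one of {sorted(VALID_FEEDBACK)}")
    return table.update_item(
        Key={"interactionId": interaction_id},
        # An attribute-name placeholder avoids clashes with DynamoDB reserved words.
        UpdateExpression="SET #fb = :fb",
        ExpressionAttributeNames={"#fb": "feedback"},
        ExpressionAttributeValues={":fb": feedback},
        # Fail instead of creating a new item if the interaction ID is unknown.
        ConditionExpression="attribute_exists(interactionId)",
    )
```

Storing feedback on the same record as the prompt and response makes it straightforward to pull out poorly rated answers later.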

Lessons Learned

Streaming vs. Complete Response

Depending on the model, you have two ways to get a response back. All models can return the complete response once generation finishes. The drawback is that generation may take 5 to 15 seconds, so the frontend must wait for that promise to resolve and show a loading spinner to indicate that something is happening. Some models support streaming, where the response starts almost immediately and the words appear as they are generated. Both approaches work; the choice comes down to how you want to handle the user experience. Marcia Villalba covers this in the following YouTube video: https://youtu.be/NDtrk9Pm9w0?si=MhlVf7tkK3l_pj-H
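For the streaming option, Bedrock provides `invoke_model_with_response_stream`. Its response body is an event stream whose `chunk` events carry JSON bytes. The sketch below assumes the Claude Text Completions format, where each chunk holds a `completion` text fragment. Other model families structure their chunks differently.

```python
import json
from typing import Any, Iterable, Iterator


def completion_chunks(events: Iterable[dict]) -> Iterator[str]:
    """Decode each event's chunk bytes and yield its 'completion' text fragment."""
    for event in events:
        chunk = event.get("chunk")
        if chunk:  # skip events that carry no chunk payload
            yield json.loads(chunk["bytes"]).get("completion", "")


def stream_completion(bedrock_runtime: Any, model_id: str, body: str) -> Iterator[str]:
    """Start a streaming invocation and yield text as Bedrock produces it."""
    response = bedrock_runtime.invoke_model_with_response_stream(
        modelId=model_id, body=body,
        contentType="application/json", accept="application/json",
    )
    yield from completion_chunks(response["body"])
```

Printing each fragment as it arrives, for example `print(piece, end="", flush=True)`, gives the typing effect. Forwarding those fragments to a browser also needs a streaming-capable transport rather than a single request/response call.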

Try Different Models

Use Bedrock’s support for multiple models to your advantage. Understand the different models, their use cases, and costs. Try several out against your data to determine the best fit.
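One simple way to try several models against your own data is a small harness that runs the same questions through each model and records answers and latency for side-by-side review. In the sketch below, the `invoke(model_id, question)` callable is a hypothetical hook you would wire to Bedrock. Cost is not measured here and would need to be estimated separately from token usage and pricing.

```python
import time
from typing import Callable, Dict, List

# Hypothetical signature: invoke(model_id, question) -> answer text.
Invoke = Callable[[str, str], str]


def compare_models(model_ids: List[str], questions: List[str],
                   invoke: Invoke) -> List[Dict[str, object]]:
    """Run every question against every model and record the answer and wall-clock latency."""
    results = []
    for model_id in model_ids:
        for question in questions:
            start = time.perf_counter()
            answer = invoke(model_id, question)
            results.append({
                "model": model_id,
                "question": question,
                "answer": answer,
                "seconds": round(time.perf_counter() - start, 2),
            })
    return results
```

Using questions that real users have asked, such as those captured in the analytics table, makes the comparison more representative than ad hoc prompts.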

Break down your application

You may be tempted to build everything as a single Node.js application, but I prefer to break the architecture down into components and microservices. This makes it easier to debug and test along the way, and it lets you swap out individual components rather than redesign the whole application.

Infrastructure as Code

I used AWS CloudFormation to define the entire stack. CloudFormation automates the provisioning of AWS resources and makes the infrastructure reusable, so I can easily replicate the stack to build a chatbot for another site without recoding. Also, use AWS CodeCommit with a CI/CD workflow so that Amplify automatically rebuilds the application whenever changes are committed.
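Replicating the stack for another site can come down to deploying the same template with different parameters. The sketch below does this with the CloudFormation `create_stack` call from boto3. The parameter names `SiteUrl` and `SiteName` and the `chatbot-<site>` stack naming are hypothetical. Your template would need to declare matching parameters, and `site_name` must be valid in a stack name (letters, digits, and hyphens).

```python
from typing import Any, Dict, List


def stack_parameters(site_url: str, site_name: str) -> List[Dict[str, str]]:
    """Build CloudFormation parameters; these parameter names are hypothetical."""
    return [
        {"ParameterKey": "SiteUrl", "ParameterValue": site_url},
        {"ParameterKey": "SiteName", "ParameterValue": site_name},
    ]


def deploy_chatbot_stack(cfn_client: Any, template_body: str,
                         site_url: str, site_name: str) -> str:
    """Create one copy of the chatbot stack for a site and return the new stack ID."""
    response = cfn_client.create_stack(
        StackName=f"chatbot-{site_name}",
        TemplateBody=template_body,
        Parameters=stack_parameters(site_url, site_name),
        # Needed when the template creates IAM roles (e.g. for the Lambda functions);
        # use CAPABILITY_NAMED_IAM instead if those roles have custom names.
        Capabilities=["CAPABILITY_IAM"],
    )
    return response["StackId"]
```

With `cfn_client = boto3.client("cloudformation")`, adding a chatbot for a new site becomes one function call with a new URL and name rather than a code change.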

Final Architecture

The final architecture is below. I hope that this article encourages you to test and build your own AI chatbot. This exercise improved my coding skills and taught me more about AI.